December 22, 2025

Robert “RSnake” Hansen

I had a very interesting conversation with a good friend of mine, and he asked, “Do you have any new insights into the CVSS 4.0 usage problem, specifically the fact that nobody is using it correctly and no vulnerability scanning or management companies allow their users to add the critical environmental and threat metrics?” Boy, what a great question! There are other takes that are worth a read as well, and I don’t know all of the reasons, but I do have some theories, so here goes my answer.

V4 arrived with a kind of forced confidence, as if the additional structure alone would redeem the problems that have piled up for years. However, the model is harder to use than V3 because it needs quite a few more inputs to calculate a score, which is both a technical issue and a UI one.

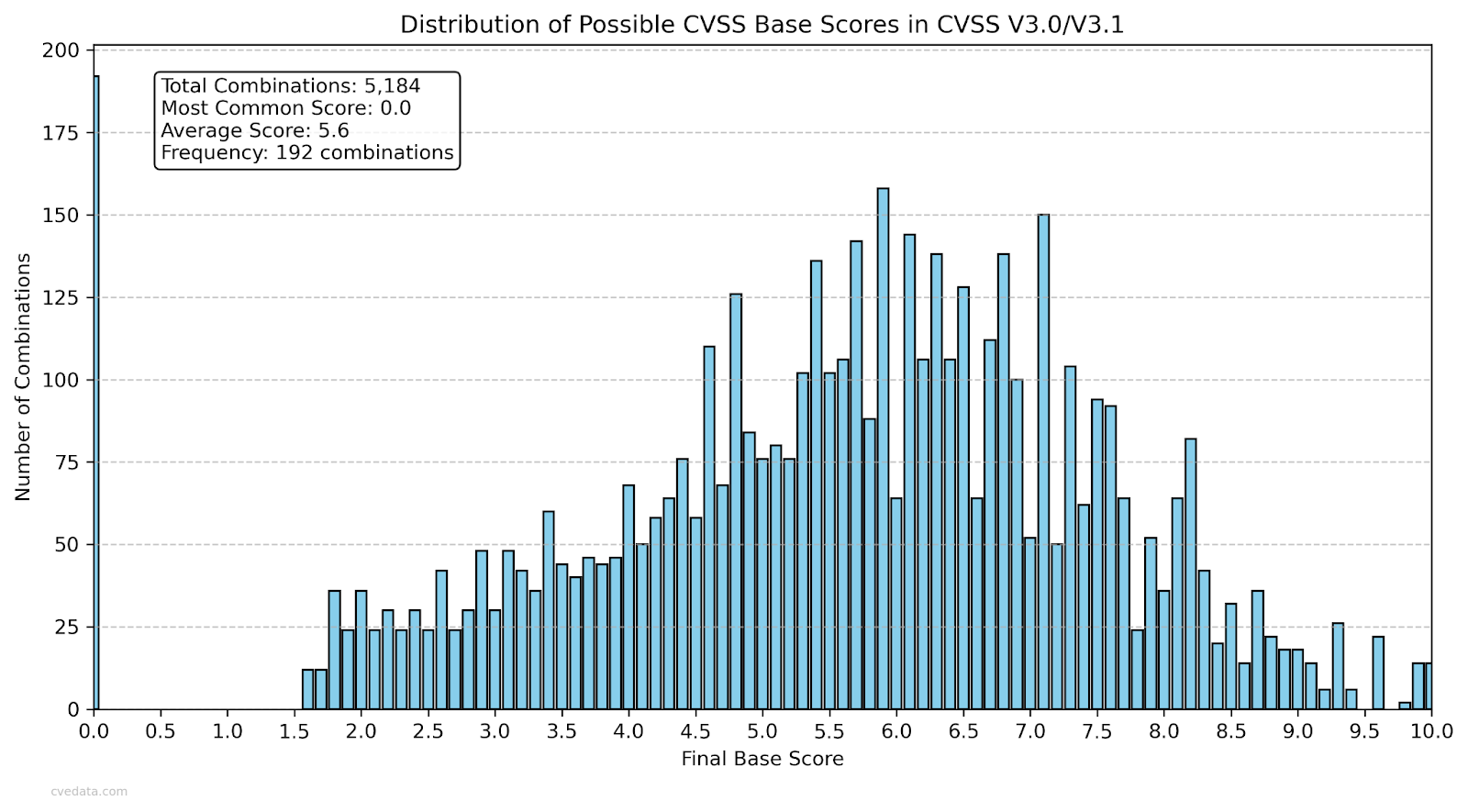

Graph 1: Possible outcomes from 0-10 in CVSS V3

Graph 2: Possible outcomes from 0-10 in CVSS V4

Next, the range of reachable outcomes from 0-10 is greater in V3 (Graph 1) than in V4 (Graph 2). Both graphs are static, showing all possible combinations mapped out for each version of the base score. In version 3 you see a big chunk of potential values on the middle to higher end of the graph, and with version 4 there is a lot missing, all the way up to the value 4.0. More importantly, it shows that CVSS V4 can reach fewer distinct numbers from 0-10 because of the way the calculator works: some base score values simply cannot be produced. More combinations of inputs in the calculator lead to fewer reachable scores. Fewer scores, once you remove the impossible ones, narrows the distance between results. For instance, 2.4 is right next to 4.0 mathematically in CVSS V4. Therefore, there is less granularity in the base score, or any score you might create, in V4 than in V3. That creates a system that looks more precise on paper because of the far greater number of inputs, while giving analysts less resolution in practice.

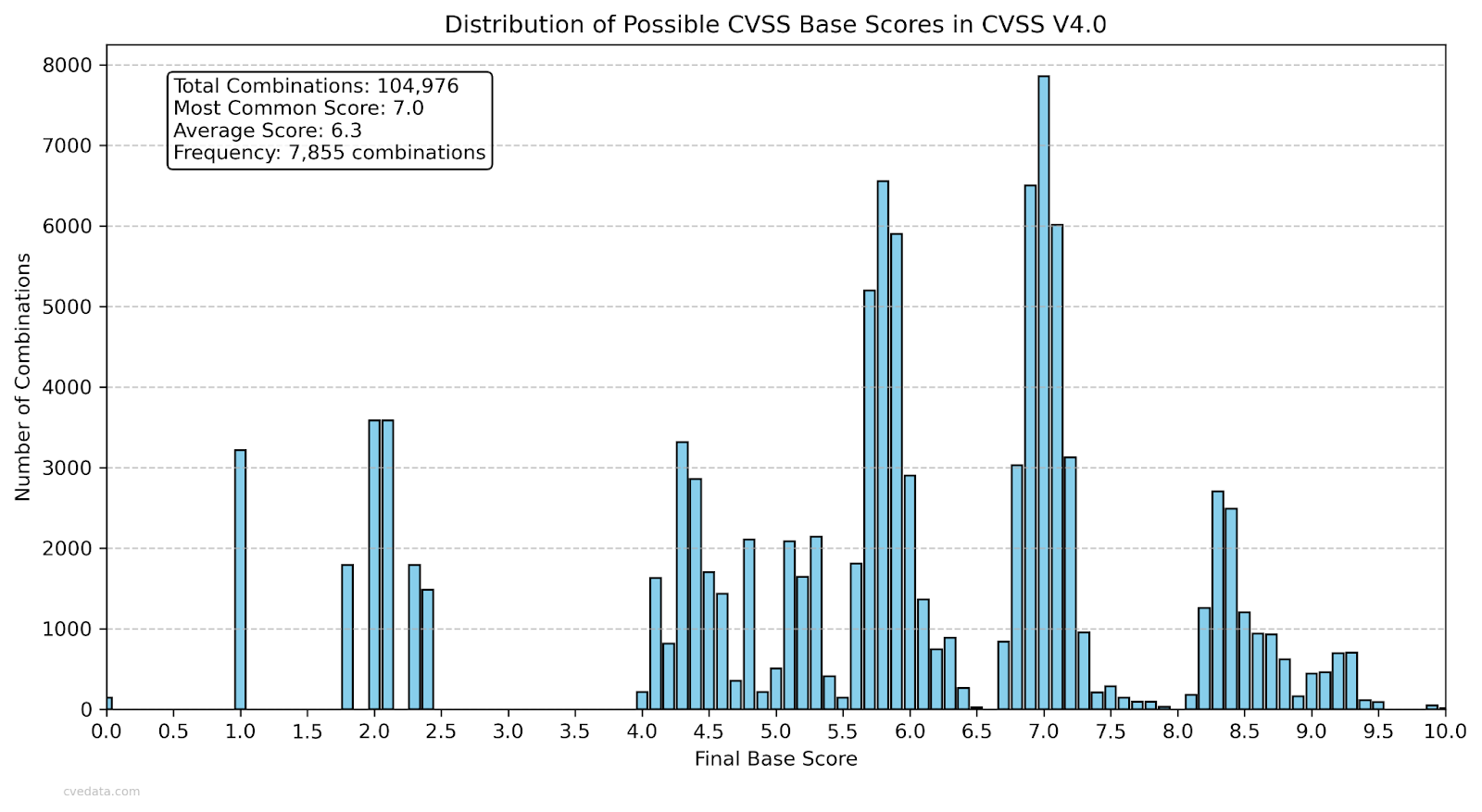

Graph 3: All combinations between 0-10 for all versions of CVSS

And not just a little less. In Graph 3 you can see that in CVSS V3 the calculator can reach 84 different numbers from 0-10, while CVSS V4 can only reach 63. That’s a huge reduction in reachable scores, meaning that you’ll see a lot more vulnerabilities with the same score, despite V4 being a much more complicated calculator that captures more factors. As a side note, the percent is slightly lower than the count because 0-10 split by 0.1 gives 101 possible values, not 100, which makes the percentages a bit off, but that is the same between versions.
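The arithmetic behind that side note is quick to check. This is a minimal sketch that takes the two counts quoted above (84 and 63) as given and divides them by the 101 values on a 0.0 to 10.0 scale in 0.1 steps. The name `coverage_percent` is purely illustrative.

```python
# Number of distinct values from 0.0 to 10.0 inclusive, in 0.1 steps.
SCALE_SLOTS = len(range(0, 101))  # 101, not 100

# Reachable score counts quoted in the article (taken as given, not recomputed).
REACHABLE = {"CVSS V3": 84, "CVSS V4": 63}


def coverage_percent(reachable: int, slots: int = SCALE_SLOTS) -> float:
    """Share of the 0.0-10.0 scale that a calculator can actually produce."""
    return 100.0 * reachable / slots


for version, count in REACHABLE.items():
    pct = coverage_percent(count)
    print(f"{version}: {count} of {SCALE_SLOTS} values ({pct:.1f}%)")
```

Dividing by 101 instead of 100 is why 84 reachable values comes out a little under 84 percent, and the same shift applies to both versions.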

Then there is the technical debt of accumulated design choices that the vulnerability management companies have already made in their products. The interface patterns, the scoring paths, the database design, the dependencies under the surface, all of it has grown in ways that make adoption painful. Their customers will also have their own technical debt, built on the APIs the vendors published. The vendors cannot magically wave a wand and get all their customers to update to a new API just because a new version of CVSS comes out. You cannot bolt V4 in place of V3 and get the same operational flow, or even the same string lengths. For instance, the two CVSS strings below are quite different in length, so you can’t reuse the same database columns if they were fixed width:

CVSS V3: AV:A/AC:H/PR:L/UI:R/S:U/C:L/I:L/A:L/CR:L/IR:L/AR:L/MAV:A/MAC:L/MPR:N/MUI:N/MS:U/MC:N/MI:N/MA:N

CVSS V4: AV:A/AC:L/AT:P/PR:N/UI:N/VC:H/VI:L/VA:N/SC:H/SI:H/SA:L/E:A/CR:H/IR:H/AR:H/MAV:N/MAC:L/MAT:N/MPR:N/MUI:N/MVC:H/MVI:H/MVA:H/MSC:H/MSI:S/MSA:S/S:N/AU:N/R:A/V:D/RE:L/U:Clear
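To make the size difference concrete, here is a small sketch that splits the two example strings above into metrics and compares them. The helper `parse_vector` and the idea of a column sized exactly to the V3 example are assumptions for illustration, not any vendor’s actual schema.

```python
# The two example vectors from the article, copied verbatim.
V3_VECTOR = (
    "AV:A/AC:H/PR:L/UI:R/S:U/C:L/I:L/A:L/CR:L/IR:L/AR:L/"
    "MAV:A/MAC:L/MPR:N/MUI:N/MS:U/MC:N/MI:N/MA:N"
)
V4_VECTOR = (
    "AV:A/AC:L/AT:P/PR:N/UI:N/VC:H/VI:L/VA:N/SC:H/SI:H/SA:L/E:A/"
    "CR:H/IR:H/AR:H/MAV:N/MAC:L/MAT:N/MPR:N/MUI:N/MVC:H/MVI:H/MVA:H/"
    "MSC:H/MSI:S/MSA:S/S:N/AU:N/R:A/V:D/RE:L/U:Clear"
)


def parse_vector(vector: str) -> dict[str, str]:
    """Split 'AV:A/AC:H/...' into {'AV': 'A', 'AC': 'H', ...}."""
    return dict(part.split(":", 1) for part in vector.split("/"))


for name, vector in (("V3", V3_VECTOR), ("V4", V4_VECTOR)):
    metrics = parse_vector(vector)
    print(f"CVSS {name}: {len(vector)} characters, {len(metrics)} metrics")

# Hypothetical fixed-width column sized to the V3 example above.
COLUMN_WIDTH = len(V3_VECTOR)
print("V4 fits in a V3-sized column:", len(V4_VECTOR) <= COLUMN_WIDTH)
```

The V4 example carries 32 metrics against 19 for V3, so anything that assumed the V3 shape (column widths, parsers, form layouts) needs to change, not just the version label.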

The churn that follows a change from V3 to V4, for most companies, will not be brief or simple, and customers will also need to be trained on it. Most vendors already regard CVSS as something they tolerate rather than something that serves them. Asking them to rebuild their UIs and processes around a more complex version is, at best, wishful.

However, I think all of that is minor and could be dealt with if the carrots were worth it, or if the sticks were big enough.

The deeper problem sits inside the philosophy of base scores. When you calculate a CVSS string it automatically creates a default base score even without the environmental and threat variables, because the designers know that very few people will compute every vector for every issue. That default score then becomes the entire product. Vendors lean on it because they do not have the time or appetite to push the burden onto the user. The working group dislikes this behavior and insists the model is intended to be used with additional environmental context, but they created the path of least resistance. Users take the path that exists, not the path that is preached.

If you hand a kid a pile of candy and walk away, of course they’re going to eat it, even if you click your tongue at the fact that they’ll get cavities and eventually diabetes. You cannot give kids unfettered access to candy and expect them not to eat it. That is what base scores are for security vendors… candy!

Advocates of V4 imagine that most people would perform careful scoring if only they understood its value, but nothing in the last twenty years of vulnerability management technology supports that belief. The industry has been focused on, and rewarded for, vulnerability volume. Scan more targets. Find more issues than the other guy. Nothing about that screams quality of vulnerability scoring output, only quantity.

So why would a vendor upgrade when V3 already gives them base scores? What meaningful upside could there be, when they never cared in the first place about those scores?

In bake-offs, scanners have historically been judged on depth and breadth of coverage, not on whether the output meaningfully reflects real risk. For that purpose, the base score is entirely sufficient. Anything more involved, like something that requires users to enter data into a form for every vulnerability, is pointless from the vendor’s point of view. Worse than that, it creates customer friction and might even cost the vendors money.

If the CVSS designers spent time with the product leaders at the vendors that actually implement this work, they would have seen the same thing. Almost no vendor uses CVSS correctly, but more importantly, almost no vendor intends to start. The incentives do not point in that direction and the new version does not change those incentives. If anything, V4 makes things more difficult for vendors and customers.

Even if V4 is more refined in theory, it only matters when it is used as intended. CVSS V4 was deployed into a world that never intended to use it properly, and as long as the base score exists, there is really no incentive to change that. Even if a future CVSS V5 shipped without a base score, I suspect it would see even less adoption than V4. There’s no obligation to update, so most organizations would comfortably stick with whatever they are doing now. Without a mandate to update, why would they?

One quote from the CVSS SIG was pretty telling: “I don't really see a compelling reason to move to 4.0 other than the value in staying current.” To restate, this is from within the working group itself! If the working group doesn’t get value, why would others?

Or that’s my working theory anyway. 😂

My recommendation would be to do away with the base score entirely in V5, because vendors have misused it into a confusing mess, and to require that vendors update to V5 when it’s available. I would also require one or more threat and environmental data points to calculate a score, or the calculator will literally not work at all. Yes, that means the vendors are going to have to do more work to gather that information, programmatically, for the customer, but that is the only way that CVSS will be accurate. Trust me, I’m not holding my breath on either of those recommendations.
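As a rough illustration of what a calculator that “will not work” without context could look like, here is a hypothetical gate in front of scoring. It assumes V4-style metric abbreviations (as in the V4 string earlier), treats `X` as “Not Defined”, and reads the recommendation as requiring at least one threat metric and at least one environmental metric. `require_context` is an invented name, not part of any CVSS tooling.

```python
# Metric abbreviations as they appear in a CVSS V4 vector string.
THREAT_METRICS = {"E"}
ENVIRONMENTAL_METRICS = {
    "CR", "IR", "AR",
    "MAV", "MAC", "MAT", "MPR", "MUI",
    "MVC", "MVI", "MVA", "MSC", "MSI", "MSA",
}
NOT_DEFINED = "X"  # value meaning the metric was left unset


def parse_vector(vector: str) -> dict[str, str]:
    """Split 'AV:A/AC:L/...' into {'AV': 'A', 'AC': 'L', ...}."""
    return dict(part.split(":", 1) for part in vector.split("/"))


def has_defined(metrics: dict[str, str], names: set[str]) -> bool:
    """True if any metric in `names` is present and not 'Not Defined'."""
    return any(metrics.get(n, NOT_DEFINED) != NOT_DEFINED for n in names)


def require_context(vector: str) -> dict[str, str]:
    """Hypothetical gate: refuse to score a vector with no threat or
    environmental input (assumed policy: at least one of each)."""
    metrics = parse_vector(vector)
    missing = []
    if not has_defined(metrics, THREAT_METRICS):
        missing.append("threat")
    if not has_defined(metrics, ENVIRONMENTAL_METRICS):
        missing.append("environmental")
    if missing:
        raise ValueError(
            "refusing to score, missing " + " and ".join(missing) + " metrics"
        )
    return metrics


BASE_ONLY = "AV:A/AC:L/AT:P/PR:N/UI:N/VC:H/VI:L/VA:N/SC:H/SI:H/SA:L"
WITH_CONTEXT = BASE_ONLY + "/E:A/CR:H/MAV:N"

for vector in (BASE_ONLY, WITH_CONTEXT):
    try:
        require_context(vector)
        print("ok to score:", vector)
    except ValueError as err:
        print(err)
```

The check itself is trivial. The hard part is the one already named above: someone has to gather the threat and environmental data programmatically for every finding.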